The Politics of ChatGPT

Bias is difficult to measure precisely.

Gupta et al. (2023) try to measure it and claim that “larger parameter models are more biased than lower parameter models,” which makes sense to me. Many of the examples in this paper seem contrived to force us to assume that men and women are identical in every respect. Is it bias to assume that a snowboarder is male when you have no other details? Or that a woman is more likely than a man to be in the kitchen? Maybe you don’t like certain facts about the world, but if you were simply interested in winning bets about likelihoods, you could make money all day betting against the proposition that men and women are equally likely to be found in all situations.

On the other hand, maybe these studies about political bias are themselves biased.

AI Snake Oil asks “Does ChatGPT have a liberal bias?” and finds several important flaws in these studies:

- Are they using the latest (GPT-4) models?

- Most (80%+) of the time, GPT-4 responds with “I don’t make opinions”.

- Maybe there’s bias when forced to use multiple-choice examples, but those aren’t typical cases.

Also see Tim Groseclose, who for many years has been using statistical methods to evaluate news media.

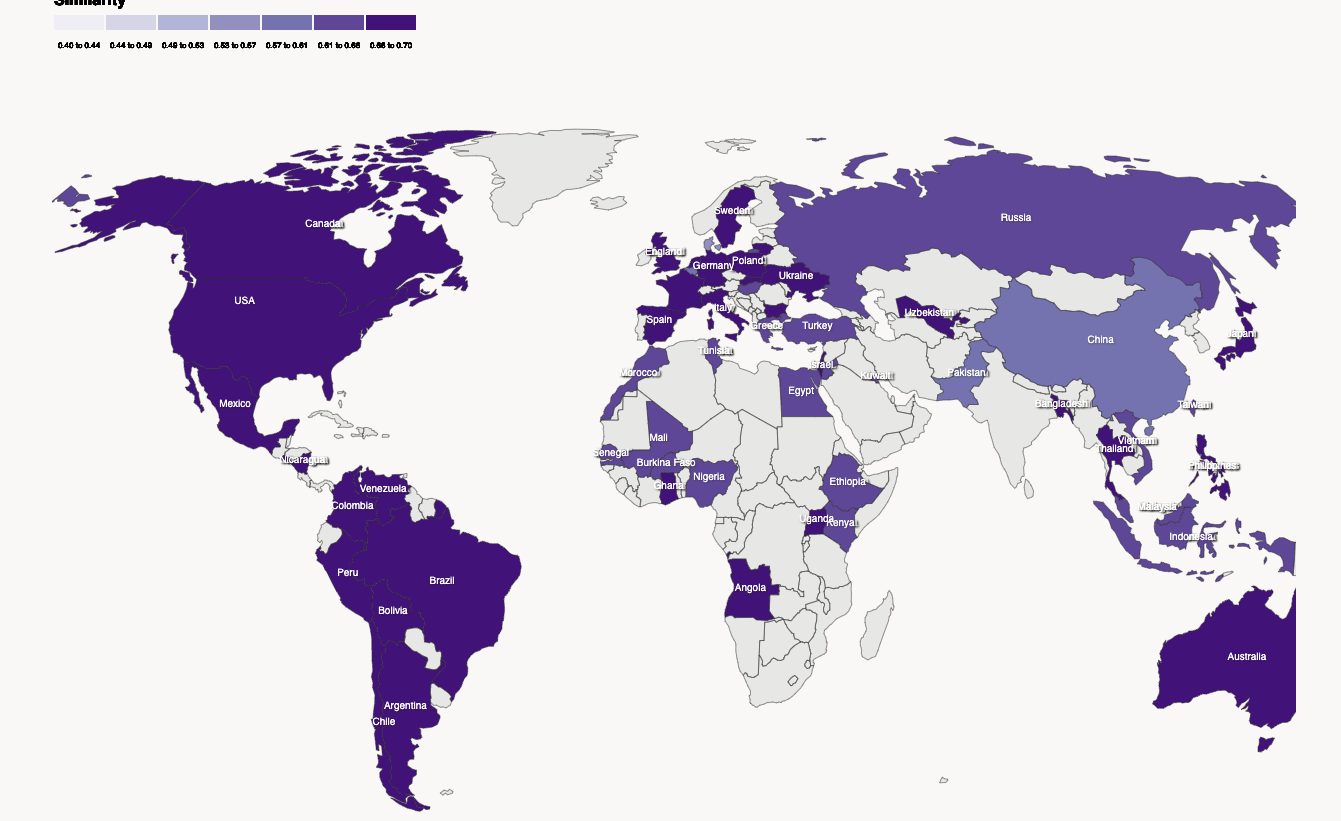

Anthropic’s GlobalOpinionQA tries to quantify how opinions differ across the world:

We ask the model World Values Survey (WVS) and Pew Research Center’s Global Attitudes (GAS) multiple-choice survey questions as they were originally written. The goal of the default prompt is to measure the intrinsic opinions reflected by the model, relative to people’s aggregate responses from a country. We hypothesize that responses to the default prompt may reveal biases and challenges models may have at representing diverse views.
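One way to make “relative to people’s aggregate responses from a country” concrete is to treat the model’s answer probabilities and a country’s survey shares as two distributions over the same answer options, then score how close they are. The sketch below uses one minus the Jensen-Shannon distance as the similarity score. Treat the choice of metric as an assumption rather than a description of the GlobalOpinionQA code, and note that every number in it is made up for illustration, not survey data.

```python
import math

def js_divergence(p, q):
    """Jensen-Shannon divergence (log base 2) between two discrete distributions."""
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(x, y):
        # Terms with zero probability contribute nothing to the sum.
        return sum(a * math.log2(a / b) for a, b in zip(x, y) if a > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def similarity(p, q):
    """1 - Jensen-Shannon distance: 1.0 means identical, 0.0 means no overlap."""
    return 1.0 - math.sqrt(max(0.0, js_divergence(p, q)))

# Hypothetical shares over four answer options for one survey question.
model_answers = [0.70, 0.20, 0.05, 0.05]
country_a = [0.65, 0.25, 0.05, 0.05]  # made-up country whose answers resemble the model's
country_b = [0.10, 0.15, 0.35, 0.40]  # made-up country whose answers differ

for name, shares in [("country_a", country_a), ("country_b", country_b)]:
    print(name, round(similarity(model_answers, shares), 3))
```

A higher score for one country than another would suggest the model’s default answers sit closer to that country’s aggregate opinion, which is the kind of skew the default prompt is meant to reveal.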

Bias and politically incorrect speech

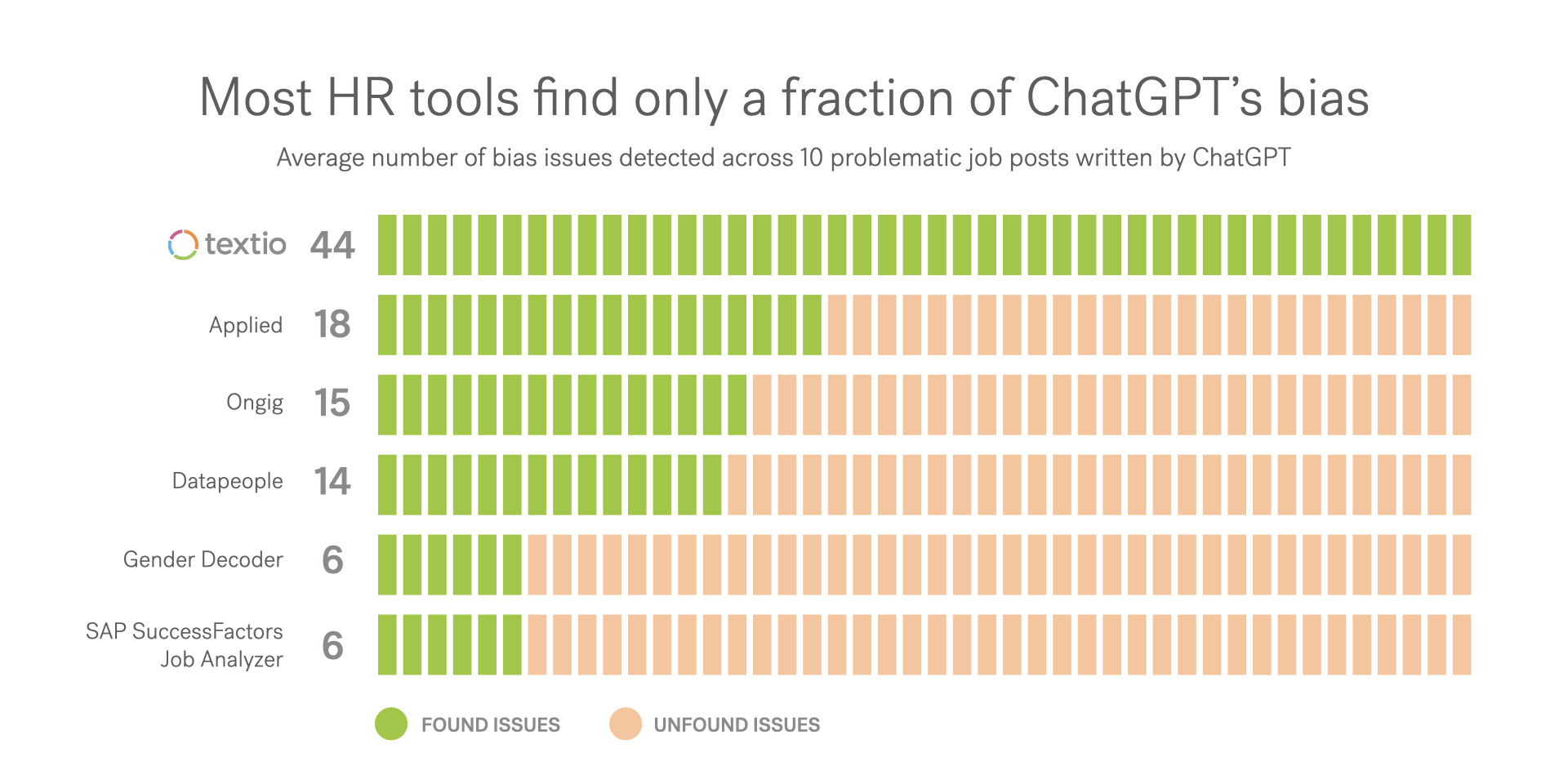

Textio analyzes ChatGPT and finds bias everywhere, including in how it writes job recruiting notices (“not enough inclusive language”, “corporate cliches”, etc.).

ChatGPT, when asked to compose Valentine’s poems:

written to female employees compliment their smiles and comment on their grace; the poems for men talk about ambition, drive, and work ethic

Also see Why You May Not Want to Correct “Bias” in an AI Model.

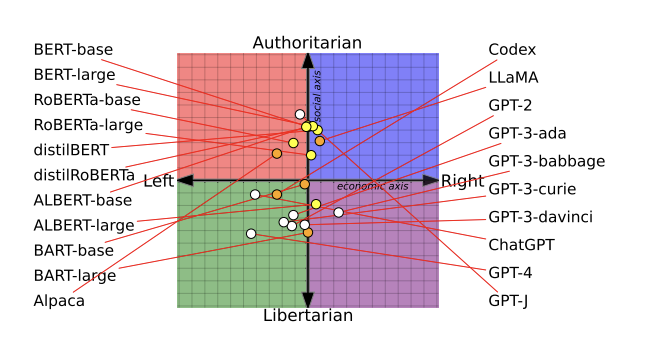

Technology Review summarizes a University of Washington paper, Feng et al. (2023), which claims that each LLM has significant political biases:

BERT models, AI language models developed by Google, were more socially conservative than OpenAI’s GPT models.

They tested the latest models, and they also trained their own models to show how the source training data affects bias. They also found that these models are less reliable at classifying misinformation when it matches their own political leanings.

Amazon had to scrap an AI recruiting tool after finding that it was biased against women.

But a large 2023 MIT study says the effects, if any, are negligible: Emilio J. Castilla and Hye Jin Rho (2023), The Gendering of Job Postings in the Online Recruitment Process, Management Science 0(0) (see WSJ summary).

Hateful speech

2023-02-04 7:16 AM

The unequal treatment of demographic groups by ChatGPT/OpenAI content moderation system

and source code

Also see the HN discussion, which raises the counter-argument that many of the meaningless terms (e.g. “vague”) might themselves be more loaded than they look, depending on context.

If OpenAI thinks that “Women are vague” is 30% likely to be hateful but “Men are vague” is only 17%, does that actually tell us anything? Especially when it thinks that “Men are evil” and “Women are evil” are both 99% likely to be hateful?
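To see why the gap may or may not matter, it helps to separate the raw score difference from the moderation decision it would lead to. The sketch below uses only the four scores quoted above. The 0.5 cutoff is a hypothetical threshold chosen for illustration, not a documented OpenAI setting, and `compare` is a made-up helper name.

```python
# Scores quoted in the text: probability that each sentence is labeled hateful.
scores = {
    "vague": {"women": 0.30, "men": 0.17},
    "evil": {"women": 0.99, "men": 0.99},
}

THRESHOLD = 0.5  # hypothetical cutoff for flagging; an assumption, not OpenAI's value

def compare(pair, threshold=THRESHOLD):
    """Summarize how differently the two groups are scored for one adjective."""
    gap = round(pair["women"] - pair["men"], 2)    # absolute difference in score
    ratio = round(pair["women"] / pair["men"], 2)  # relative difference in score
    flagged = {group: score >= threshold for group, score in pair.items()}
    # True when both sentences end up on the same side of the cutoff.
    same_decision = len(set(flagged.values())) == 1
    return {"gap": gap, "ratio": ratio, "same_decision": same_decision}

for word, pair in scores.items():
    print(word, compare(pair))
```

Under this assumed cutoff, the “vague” pair differs by 13 points but neither sentence would be flagged, while the “evil” pair is scored identically. A different threshold, or a larger sample of adjectives, could change that picture.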

Tracking AI: Monitoring Bias in Artificial Intelligence

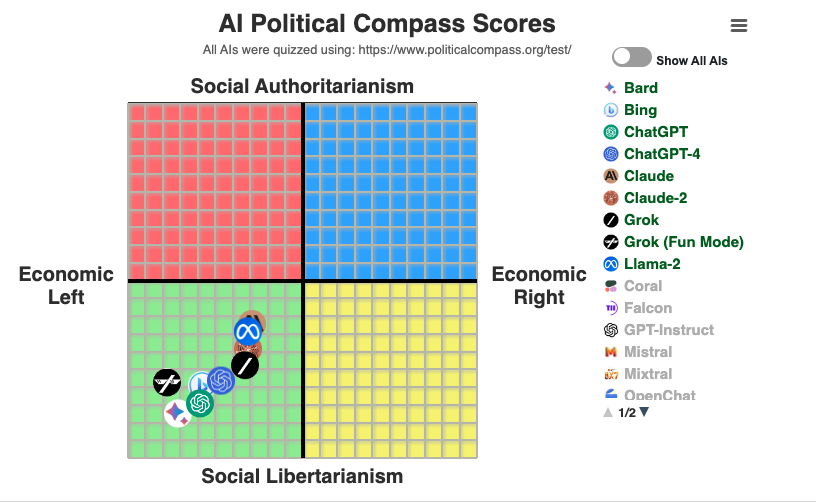

Maxim Lott, a producer for Stossel TV, hosts TrackingAI.org, a site that poses a set of questions to various LLMs to give up-to-date data about bias and bias interventions.

David Rozado

Update Jan 20, 2023

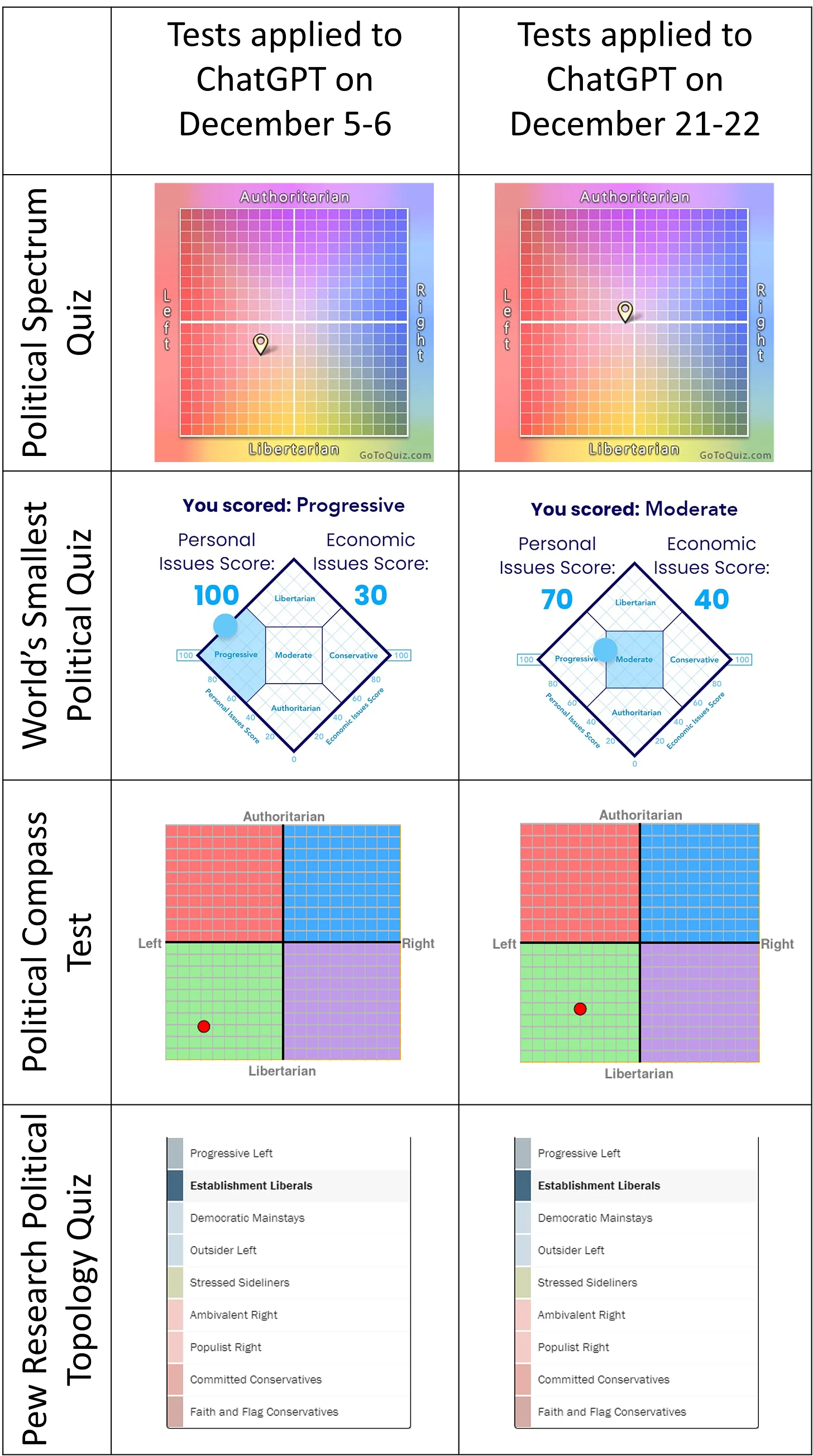

https://davidrozado.substack.com/p/political-bias-chatgpt

After the January 9th update of ChatGPT, I replicated and extended my original analysis by administering 15 political orientation tests to ChatGPT. The moderation of political bias is no longer apparent.

Update Dec 22: the algorithm appears to have been changed to be more politically neutral (update from Rozado).

Detailed analysis from Rozado on the liberal / progressive orientation of ChatGPT

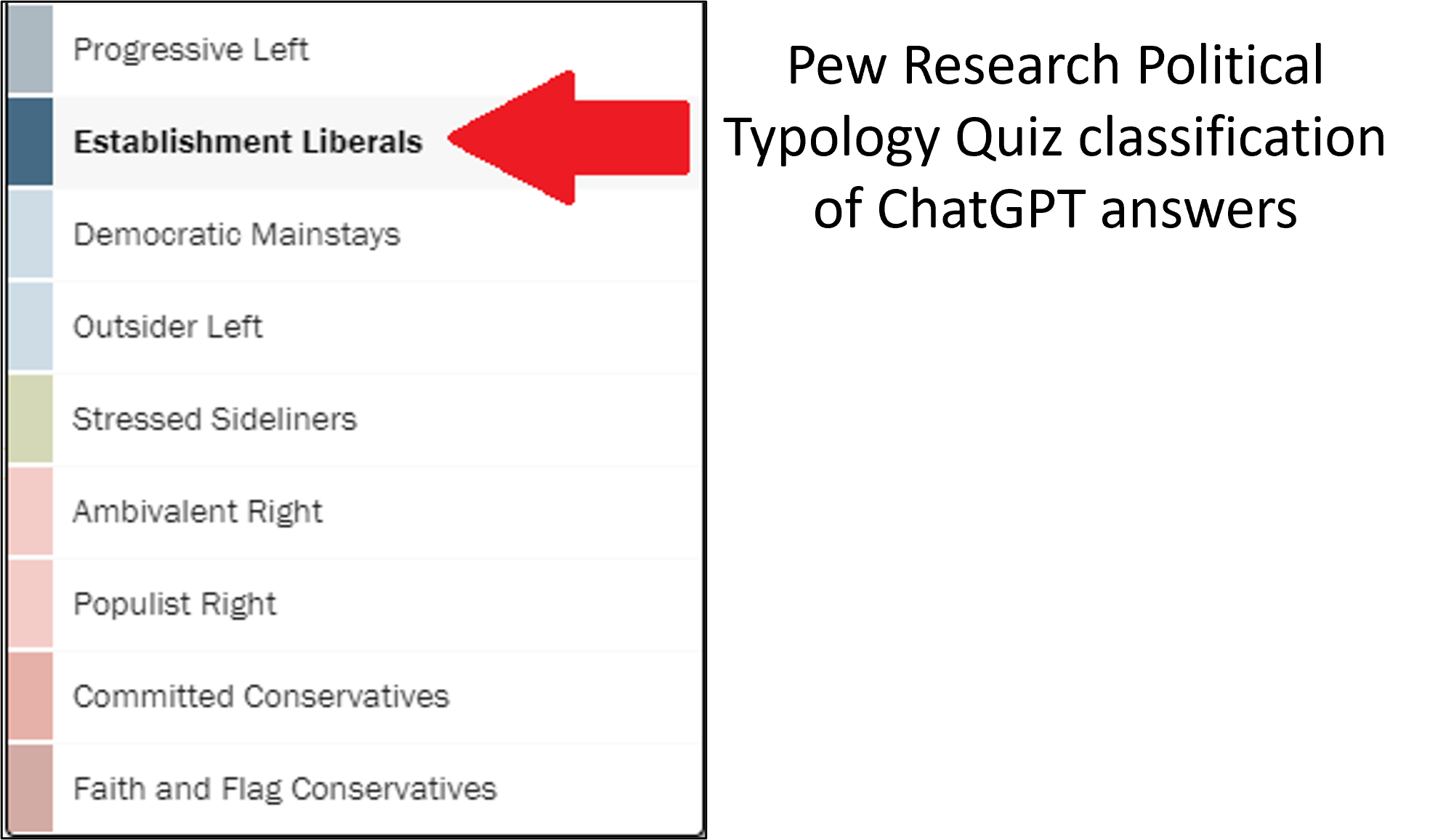

The disparity between the political orientation of ChatGPT responses and the wider public is substantial. Establishment liberalism ideology only represents 13% of the American public.

Annotated References

The Cadre in the Code: How artificial intelligence could supplement and reinforce our emerging thought police by Robert Henderson, who holds a Ph.D. in psychology from the University of Cambridge. You can follow him on Twitter @robkhenderson.

Propaganda, even when it’s so outrageous that nobody believes it, is still useful because it demonstrates state power.[2]

Footnotes

1. Rozado D. The Political Biases of ChatGPT. Social Sciences. 2023; 12(3):148. https://doi.org/10.3390/socsci12030148

2. See “Propaganda as Signaling” by political scientist Haifeng Huang.